Maretha Prinsloo and Paul Barrett

Abstract

Within the spectrum of consciousness postulated by various consciousness theorists, cognition, according to Wilber’s All Quadrants All Levels (AQAL) metatheory, merely represents a developmental “line” or “stream” and does not encapsulate the essence or apex of consciousness. However, as proposed in a previous article on consciousness theory (Prinsloo, 2012), the fractal nature of the various “streams” or “lines” of development mirrors that of the overall evolutionary emergence of consciousness: all of them involve processes of increasing differentiation followed by increasing integration of subcomponents.

This article focuses on cognition, which is of critical importance within educational and work environments, as well as within the context of leadership assessment and development. Up to a point, cognitive factors enable the emergence of consciousness and, very importantly, the implementation of one’s world view or level of awareness, as covered in a previous article in this journal. This does not imply a linear relationship between cognition and consciousness. People with high levels of cognitive capability, for example, can be found at any of the levels of consciousness hypothesised by consciousness theorists and developmental psychologists (Prinsloo, 2012). Nor is cognition regarded here merely as intellectual “ability”, the perspective that has dominated psychology and psychometrics for more than a century.

The view proposed here involves an integration of various scientific questions posed by different research traditions within the field of intelligence and cognition, aimed at addressing the:

- “what” of intelligence as embraced by Differential psychology and the IQ tradition;

- “how” of thinking as reflected by the Information Processing paradigm, and cognitive and computational neuroscience;

- “when” of cognitive capacity explored by Developmental psychologists such as Piaget and Vygotsky; and

- “where” of competence as researched by the Contextualist school.

The article presents a theoretical model of cognitive processes and a methodological approach for measuring cognitive capabilities and preferences, and contextualises cognition within the real world and within the broader spectrum of consciousness.

Cognition and Intelligence

Few terms in Psychology have elicited as much controversy as the concept of intelligence (Whiteley, 1977). Since the 1921 symposium convened by the editors of the Journal of Educational Psychology, which asked 14 expert contributors for definitions of intelligence, there have been several further attempts to develop a definition. For example, in 1986, Robert Sternberg and Douglas Detterman published the definitions of 24 leading researchers in the area, who were asked the same questions as the 14 experts in 1921. Again, as in 1921, disagreement was common. In 1996, Ulric Neisser and 8 colleagues from the APA Task Force on Intelligence published what was meant to be a definitive set of statements on intelligence, in an article entitled “Intelligence: Knowns and Unknowns”. In 2012, Richard Nisbett and colleagues updated the 1996 APA Task Force position statements, incorporating new thinking and findings from a further 15 years of research into intelligence. Their article, entitled “Intelligence: New findings and theoretical developments”, used a working definition of intelligence provided by Linda Gottfredson (1997):

[Intelligence] . . . involves the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly and learn from experience. It is not merely book learning, a narrow academic skill, or test-taking smarts. Rather it reflects a broader and deeper capability for comprehending our surroundings— “catching on,” “making sense” of things, or “figuring out” what to do (p. 13).

Intelligence is typically defined in terms of concepts such as learning, problem solving, memory, executive control, judgment, speed and the ability to abstract. A review of these conceptions suggests that the notion of intelligence is quite arbitrary or, rather, that the research community has failed to agree on a definition of the concept. Despite their obvious limitations, some of these definitions have gained general acceptance, mainly because of their parsimony and intuitive appeal. Intelligence is therefore a problematic concept: it is fuzzy, incorporating many important features for which no definite criteria can be determined.

Both the attempts to define intelligence and the concept itself have repeatedly been criticised. Some theorists regard the term intelligence as useful in everyday language but not as an adequate scientific concept, whereas others regard it as scientifically useful. Maraun (1998) perhaps put this most eloquently:

The relative lack of success of measurement in the social sciences as compared to the physical sciences is attributable to their sharply different conceptual foundations. In particular, the physical sciences rest on a bedrock of technical concepts, whilst psychology rests on a web of common-or-garden psychological concepts. These concepts have notoriously complicated grammars [of meaning] (p. 436).

A technical concept is a concept defined by a specialized or expert community, and employed within a narrow, technical field of application. A common-or-garden concept, on the other hand, is a concept with a common employment in everyday life (Baker & Hacker, 1982). Common-or-garden concepts are taught, learned and understood by the person on the street, and have meanings that are manifest in broad, normative linguistic practices (p. 453).

These psychological constructs, which Maraun refers to as “common-or-garden” concepts, such as “depression”, “intelligence”, “dominance”, “happiness” and “fear”, are of interest to laymen and specialists alike, all of whom have a greater or lesser understanding of the meanings involved, meanings that are largely rooted in grammar and semantics. Ter Hark (1990), amongst others, also points out that there are no rules available for measuring these psychological concepts, as they are not embedded in normative practices of measurement.

Scientifically capturing the essence of these complex, intangible and largely descriptive concepts is one of the core challenges in psychological research and has resulted in a polarisation of approaches ranging from quantitative to qualitative observation and interpretation as well as speculation.

As early as 1965, Guttman foresaw and attempted to resolve the controversy surrounding the value of the concept of intelligence by suggesting that one should refer to the degree of intelligence of an act rather than to the concept as such. Michell (2013) has recently expanded on this position in his article entitled “Constructs, inferences, and mental measurement”:

“Psychologists named constructs using familiar mental terms, such as “anxiety”, without dwelling upon the construct’s meaning, thinking it sufficient that constructs were “operationalized” via test scores. A construct’s “nomological network” became an issue of operational “definitions”, often involving scores on a range of tests as, for example, general intelligence is thought of as “operationally defined” via factor analysis of scores on several tests (Flynn, 2007). Thought of this way, constructs are really dispositional concepts, that is, “defined” in terms of their putative effects, but, as has already been stressed, effects cannot satisfactorily define constructs. For example, general intelligence is sometimes “defined” as abstract problem-solving ability, which says nothing about the character of intelligence per se, but merely specifies it as the otherwise unknown cause of successful abstract problem-solving behaviour. Furthermore, the construct’s dispositional label (e.g., “abstract reasoning ability”), because it seems to admit of degrees of more and less, is taken, fallaciously (Michell, 2009), to vouchsafe the construct’s presumed quantitative structure” (p. 16).

Given the above, it is not surprising that so many have ‘constructed’ definitions of intelligence that, in some cases, differ significantly from one another. From these more thoughtful philosophical perspectives, the rush to create ‘models for intelligence’ was always doomed to failure. The recent article by Ken Richardson (2013), incorporating new evidence from molecular biology and systems theory dynamics, adds to a growing wisdom on these matters. However, it is important for our purposes to briefly outline how models for intelligence (as cognition) evolved, because it is this history that provided the catalyst for the expanded approach to cognition that resulted in the first author’s theoretical propositions and assessment design.

A Brief History of Models of Intelligence

Galton’s Mental Ability

Sir Francis Galton was perhaps the first scientist to approach the systematic study of individual differences in human cognition; his approach eventually became known as ‘differential psychology’. His aim was to classify, measure and explain the variety of phenomena associated with human behaviour and cognitive performance. In his book, Inquiries into Human Faculty and Its Development, Galton (1883) set out a variety of tests and assessment methodologies for measuring basic human capabilities; these mostly consisted of sensory discrimination tasks and speed of response (reaction times and suchlike). Although he used the phrase ‘mental ability’ to indicate what he desired to measure, he ultimately failed to construct a ‘measure’ of what he himself, and many others, accepted as ‘intelligence’.

Binet’s Scholastic Assessments

In 1905, responding to the need to assess children’s learning and intellectual performance within the Paris school system, Alfred Binet created what has since been promoted as the very first ‘intelligence’ test (Jensen, 1998). It comprised many kinds of task, some borrowed from Galton’s earlier work, such as the discrimination of weights, but most involving more cognitively complex assessments of reasoning, judgment and verbal comprehension. However, in contrast to the ‘received wisdom’ promoted by many psychologists, Michell (2012) has noted that Binet did not claim his test measured “intelligence”:

“This scale properly speaking does not permit the measure of the intelligence, because intellectual qualities are not superposable, and therefore cannot be measured as linear surfaces are measured, but are on the contrary, a classification, a hierarchy among diverse intelligences; and for the necessities of practice this classification is equivalent to a measure” (Binet & Simon, 1980, pp. 40–41).

Psychometrics and Multiple Ability Models

Also referred to as the structural or psychometric approach, and dominant during the first half of the twentieth century, this approach focuses on individual differences in order to reveal the structure of the intellect in terms of content-related abilities. It largely consisted of efforts to conceptualise intelligence by means of factor analysis (Wagner, 1987; Wagner & Sternberg, 1984). In this approach, common sources of variance among individuals are researched as unitary psychological factors or attributes. The identification of “general” versus “specific” intelligence became a major issue at this point. The contributions of the British theorists Spearman and Burt, and of the American theorist Cattell, are relevant here. Spearman introduced the method of factor analysis, thereby laying the foundation of Differential psychology. In his later work he produced his famous two-factor theory of intelligence, which represents intelligence as comprising both general ability, designated “g”, and specific abilities, or “s”. The general factor pervades the entire range of intellectual performance, while the specific factors are relevant only to specific knowledge domains. This approach, however, reflected a focus on “content”, or the “what”, as opposed to “process”, or the “how”, of thinking. Spearman’s work has been criticised for not always being supported by the data, and for sampling only a small part of human cognitive capability (particularly that related to scholastic achievement).
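The two-factor theory can be made concrete with a small calculation. If each standardised test score is modelled as a weighted sum of the general factor and an uncorrelated specific factor, the model implies that the correlation between tests j and k is simply the product of their g loadings, r_jk = l_j × l_k. Spearman’s tetrad differences (for example r_13 r_24 - r_14 r_23) then vanish. The Python sketch below assumes standardised scores and uses hypothetical loadings; the function names are illustrative and not part of any published procedure.

```python
from itertools import combinations

def implied_correlations(loadings):
    """Correlation matrix implied by one general factor plus uncorrelated specifics.

    Assumes standardised scores, so r_jk = l_j * l_k off the diagonal.
    """
    n = len(loadings)
    return [[1.0 if j == k else loadings[j] * loadings[k] for k in range(n)]
            for j in range(n)]

def tetrad_differences(r):
    """All three tetrad differences for every set of four tests."""
    diffs = []
    for a, b, c, d in combinations(range(len(r)), 4):
        diffs.append(r[a][b] * r[c][d] - r[a][c] * r[b][d])
        diffs.append(r[a][b] * r[c][d] - r[a][d] * r[b][c])
        diffs.append(r[a][c] * r[b][d] - r[a][d] * r[b][c])
    return diffs

# Hypothetical g loadings for five tests (illustrative values only).
r = implied_correlations([0.8, 0.7, 0.6, 0.5, 0.4])
print(max(abs(t) for t in tetrad_differences(r)))  # zero up to floating-point rounding

# Adding a group factor shared only by tests 0 and 1 breaks the pattern.
r_group = [row[:] for row in r]
r_group[0][1] = r_group[1][0] = r[0][1] + 0.1
print(max(abs(t) for t in tetrad_differences(r_group)))  # clearly non-zero
```

Observed correlation matrices never fit exactly, so in practice tetrads are judged against sampling error; the sketch only shows the algebraic pattern a single general factor implies, and how a shared group factor disturbs it.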

In an attempt to overcome the shortcomings of existing theories at that stage, Burt integrated various theoretical positions, including those outlined above, and in 1949 developed a model of the structure of mind: a hierarchical exposition of mental structure. This hierarchy also consists of “specific factors” at the lowest level, which are integrated into broader “minor group factors”, which in turn are integrated into fewer “major group factors”, all of which are aspects of “g” (Sternberg, 1977a). Some of Burt’s work has been discredited as unsuitable for serious scientific consideration. It has nevertheless proved to be a rich source of hypotheses for subsequent research. Vernon formulated an alternative hierarchical model with four levels of abstraction: general ability at the top; verbal-educational and spatial-mechanical abilities at the second level; minor group factors at the third level; and specific factors at the fourth level.

In the United States, Thurstone developed the statistical technique of multiple factor analysis. By doing so he directed worldwide attention to the developments in American Psychology. His theoretical position was opposed to that of the behaviourists in that he ascribed cognitive behaviour to occurrences within the individual, rather than seeing it merely as a response to external stimuli. He also criticised the basis on which items for IQ tests were selected, and disregarded the mental age concept. Thurstone allowed empirical findings to guide the development of his theory, thereby maintaining an empiricist position. His method revealed seven primary mental abilities, namely: spatial ability (s), perceptual speed (p), number facility (n), verbal meaning (v), rote memory (m), word fluency (w) and inductive reasoning (i). According to Thurstone, general factors exist only by virtue of the correlations between primary abilities. Thurstone’s theory initially seemed to completely discredit the British theory of “g”. Cattell, however, suggested in 1941 that “g” might be obtained as a second-order factor among Thurstone’s primary abilities (Snow, Kyllonen, & Marshalek, 1984; Sternberg, 1977c; Verster, 1982).

Guilford’s Structure-of-Intellect (S-I) model was proposed in an attempt to integrate the proliferation of ability factors in intelligence research stimulated by Thurstone’s work. According to Guilford, abilities can be represented as a three-dimensional cube, the dimensions being:

- “operations” (evaluation, convergent thinking, divergent thinking, memory, cognition);

- “products” (units, classes, relations, systems, transformations, implications); and

- “contents” (figural, symbolic, semantic, behavioural).

The result is a three-way matrix of 120 cells, each made up of a unique combination of an operation, content and product, and each representing a unique mental ability. The proposal of such a large number of abilities as independent factors has, however, been viewed sceptically, especially since most empirical findings contradict it. Although the principles of classification identified by Guilford provide a logical and convenient frame of reference, they can also be criticised for being largely arbitrary. His model has, however, transcended the narrow “content” focus of the Differential approach, and has served as a rich source for ensuing research. The S-I model also provided a basis for identifying the various levels of complexity at which individuals prefer to process information, and thus underlies a number of complexity-oriented systemic models. Guilford’s contribution in this regard has, however, rarely been acknowledged by systems theorists whose work closely resembles it (Snow et al., 1984; Sternberg, 1977c; Verster, 1982; Wagner & Sternberg, 1984).
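The figure of 120 cells follows directly from the three dimensions Guilford lists: 5 operations × 6 products × 4 contents. The short Python sketch below enumerates the cube with the standard library’s itertools.product; the constant names are illustrative.

```python
from itertools import product

# The three dimensions of Guilford's Structure-of-Intellect cube, as listed above.
OPERATIONS = ["evaluation", "convergent thinking", "divergent thinking", "memory", "cognition"]
PRODUCTS = ["units", "classes", "relations", "systems", "transformations", "implications"]
CONTENTS = ["figural", "symbolic", "semantic", "behavioural"]

# Each cell is one (operation, product, content) combination, i.e. one hypothesised ability.
cells = list(product(OPERATIONS, PRODUCTS, CONTENTS))

print(len(cells))  # 120
print(cells[0])    # ('evaluation', 'units', 'figural')
```

Because every cell is a distinct combination, the model commits to 120 separately identifiable abilities, which is precisely the claim that later empirical work found hard to sustain.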

There have been many other attempts at creating taxonomic systems for the representation of cognitive abilities (Prinsloo, 1992). Some of these models are more useful than others.

According to Cattell, as well as Horn’s later “fluid-crystallised” theory, a general factor can be subdivided into fluid ability (Gf) and crystallised ability (Gc). Gc is primarily applicable to verbal-conceptual tasks, whereas Gf is linked to genetic and neurological determinants as well as to incidental learning. Whereas verbal comprehension and the cognition of semantic relations load highly on Gc, induction, general reasoning and the cognition of figural relations load on Gf. An impressive body of evidence supports Cattell’s theory, which he revised and extended over time. His choice of item content to evaluate Gf can, however, be seriously questioned, especially within cross-cultural and cross-gender contexts (Gustafsson, 1988; Sternberg, 1981; Verster, 1982).

Horn also modified his theory and in a 1986 model, analysed ability within an information processing hierarchy with levels of sensory perception, associational processing, perceptual organisation and relational eduction (Gustafsson, 1988; Sternberg, 1981).

Guttman proposed a “facet theory” in which a facet is a set of elements. If, for example, set A consists of all the verbs in a language and set B of all the nouns, the Cartesian space AB would include all possible ab relations, and sets A and B would be facets of space AB. A Cartesian space can, however, be formed from any number of facets. With this model, Guttman (1965; Sternberg, 1981) synthesised two distinct notions into a single theory: (a) differences in the type of task material (the domain) and (b) differences in the degree of task demand (the range), which extends from an analytical core (the nature of the relations to be cognised) to achievement (the application of rules). The resulting radex can thus be seen as a doubly ordered system which combines a simplex (a set of variables representing an order of complexity) and a circumplex (variables differing in the type of ability they define). Guttman applied a form of non-metric scaling in developing his radex model, which therefore does not derive solely from factor analytic research. He also made use of rank-order data in studying interrelations among intelligence scores.
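Facet theory’s Cartesian space and the radex’s two orderings can also be sketched numerically. The Python example below forms a facet space as the Cartesian product of two small hypothetical facets, echoing the verb-noun example above, and then builds two illustrative correlation patterns. The decay rule rho ** distance is an assumption chosen for simplicity, not Guttman’s own formulation: in the simplex, correlations fall off with distance along an order of complexity, whereas in the circumplex they depend on distance around a circle, so the first and last variables become neighbours.

```python
from itertools import product

def facet_space(*facets):
    """Cartesian product of facets: every combination of one element from each facet."""
    return list(product(*facets))

def simplex(n, rho):
    """Illustrative simplex: correlation decays with distance along an ordering."""
    return [[rho ** abs(i - j) for j in range(n)] for i in range(n)]

def circumplex(n, rho):
    """Illustrative circumplex: correlation decays with distance around a circle."""
    return [[rho ** min(abs(i - j), n - abs(i - j)) for j in range(n)]
            for i in range(n)]

# Hypothetical facets A (verbs) and B (nouns).
space = facet_space(["reads", "writes"], ["book", "letter"])
print(space)  # [('reads', 'book'), ('reads', 'letter'), ('writes', 'book'), ('writes', 'letter')]

s = simplex(5, 0.8)
c = circumplex(6, 0.8)
print(s[0][1] > s[0][4])   # True: the extremes of a simplex are least related
print(c[0][5] == c[0][1])  # True: on a circle, the last variable neighbours the first
```

In a radex both orderings operate at once: distance from the centre tracks complexity (the simplex), while angular position tracks the type of ability (the circumplex).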

Hierarchical models based on correlational data and reflecting content-specific abilities can, however, be regarded as the most widely held view within the Differential paradigm.

Although the Differential approach stimulated enthusiasm in research for most of the previous century and has led to the accumulation of a wealth of research findings, it can be criticised on a number of grounds (Carroll, 1974; Gustafsson, 1988; Horn, 1986; Howe, 1988; Sternberg, 1977c, 1983), including:

- The primary function of the differential approach was phenomena detection, not explanation (Haig, 2005). It is a paradigm for describing aggregate differences between groups of individuals. While phenomena detection is an essential component of any investigative science, the approach is essentially limited to description, focusing on the development of selection instruments rather than on explaining the phenomenon of intelligence;

- The hierarchical model of ‘g’ as a general factor of intelligence is a description of a phenomenon which has practical value but, again, lacks a coherent causal explanatory theory. It can be ‘constructed’ from the analysis of covariance between many kinds of ability tests, from any set of independent components which share multiple underlying causal constituents (Thomson’s ‘bonds’ model: Thomson, 1916; Bartholomew, Deary, & Lawn, 2009), or from a dynamical systems model (van der Maas et al., 2006);

- The cultural bias of almost all ability tests;

- Although intercorrelations of ability scores are mostly positive, different sources of variance are often found. Contrary to a widely held belief, the speed and power of thinking represent two different constructs that are not necessarily linearly related;

- The Differential approach failed to consider the nature of thinking processes and merely focused on the person’s current “ability” to apply convergent reasoning (logical-analytical) processes to deal with highly structured problems in a specific content domain – thereby focusing on a highly selected and limited aspect of cognition.
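The point that ‘g’ can emerge without a single underlying cause can be illustrated with a minimal version of Thomson’s sampling idea. The sketch below assumes a pool of independent, equal-variance ‘bonds’ and treats each hypothetical test as the sum of a random subset of them. Under these assumptions the correlation between two tests equals the number of shared bonds divided by the square root of the product of their sizes. Every correlation comes out positive, producing a positive manifold, even though no general factor was built into the model. The pool size, number of tests and subset size are arbitrary illustrative choices.

```python
import math
import random

def sample_tests(n_bonds, n_tests, bonds_per_test, seed=0):
    """Each hypothetical test draws a random subset of the available bonds."""
    rng = random.Random(seed)
    return [set(rng.sample(range(n_bonds), bonds_per_test)) for _ in range(n_tests)]

def bonds_correlation(a, b):
    """Correlation of two sums of independent, equal-variance bonds.

    cov = |a & b| * var, var_a = |a| * var, var_b = |b| * var,
    so the common bond variance cancels out.
    """
    return len(a & b) / math.sqrt(len(a) * len(b))

tests = sample_tests(n_bonds=200, n_tests=6, bonds_per_test=100)
r = [[bonds_correlation(a, b) for b in tests] for a in tests]

for row in r:
    print(" ".join(f"{value:.2f}" for value in row))  # off-diagonal values cluster near 0.5
```

A dynamical systems account, such as the mutualism model cited above, offers yet another route to the same positive manifold; it is not sketched here.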

The concept of intelligence is thus descriptive rather than explanatory in nature. It is useful for labelling and predictive purposes. However, stating that someone performs well because he or she is intelligent is no more meaningful than saying that someone produces much because he or she is productive. The well-known cognitive theorists Sternberg and Feuerstein have taken note of this more contemporary position and provide generic, qualified descriptions of the concept of intelligence.

The position taken on the concept of intelligence in this paper is that:

- the concept is useful for practical purposes;

- it is used in a descriptive rather than explanatory sense;

- no attempt is made to construct a “correct” definition;

- the construct represents a prototype;

- the concept of intelligence primarily refers to the quality of conceptualisation processes, or the way in which a person interprets the world meaningfully (Prinsloo, 1992).

It is proposed here that this emphasis on conceptualisation can be fleshed out in terms of both structural and processing aspects. In terms of the cognitive structures involved, the degree of intelligence of a mental act may be associated with:

- the number and diversity of structures;

- structural representation at different levels;

- the potential size and complexity of the structures;

- the number and quality of inter-structural links, or the degree of integration;

- the completeness and clarity of structures;

- the modifiability, or potential adaptability of structures;

- the potential for resistance to structural interference;

- the absence of blocking of, or resistance to, the formation of information structures;

- the strength of the tendency toward increasing order, integration and differentiation (Prinsloo, 1992).

The degree of intelligence of a cognitive act can also be related to the following functional aspects of information processing:

- the speed of processing;

- the goal-directedness of processing;

- the flexibility and fluency of thinking processes;

- the degree to which feedback is integrated to result in learning;

- processing power, intensity, energy and momentum;

- processing activity on all the identified levels (the levels referred to in the proposed theoretical model discussed later in this article, namely the performance, metacognitive, general or rule and subconscious levels);

- the interaction and integration of processing activities between the different levels of processing, including intuitive awareness of subconscious links; and

- the degree of automatisation of processing procedures (Prinsloo, 1992).

Cognitive Models and the Information Processing Approach

Contributions from a large number of disciplines during the 1940s and 1950s culminated in the emergence of Cognitive psychology in the mid-1960s (Dellarosa, 1988). This discipline formed part of the larger field of cognitive science, which includes a wide range of subdisciplines, such as philosophy, linguistics, psycholinguistics, computer science and neuroscience, all of which focus on higher mental processes. The new approach enabled researchers to investigate internal states and processes in a scientifically rigorous manner by focusing attention on limited cognitive tasks, which facilitated the collection of data on cognitive processes.

Cognitive Psychology encompasses subdisciplines such as the information processing approach (as part of Experimental psychology), the Artificial Intelligence (AI) perspective and Neuroscience. The cognitive revolution brought an end to the Behaviourist monopoly in psychological research.

Experimental psychology (Royce & Powell, 1983) is associated with a focus on cognitive processing and encompasses the information processing approach. A variety of disciplines also contributed to the development of the information processing perspective on intelligence. These include ideas from Symbolic logic and Cybernetics on the one hand, and from the Würzburg and Gestalt schools on the other. Logic and Cybernetics contributed the idea of symbol manipulation systems, which involve the rigorous deduction of ideas represented by symbols. The Gestalt school, in turn, yielded ideas on the organization of associations and selective goal-oriented searches (Newell & Simon, 1972; Simon, 1979).

The “information processing” metaphor, however, has its origin in functionalism, which lies within the Behaviourist orientation, as well as in the computing and informational sciences (Royce & Powell, 1983). The stimulus-response concept of Behaviourism is seen as a main contributor to the development of this approach (Sternberg, 1977c). Although the Information processing approach partly developed from Behaviourism, a shift occurred from the examination of observable phenomena to the study of the unobservable.

Political, social and technological developments further influenced the emergence and expansion of the Information processing paradigm. The inadequacies of the Psychometric approach, in particular, resulted in disillusionment and, in the 1960s, an increasing shift away from traditional psychometrics towards a stronger research focus on the Information Processing paradigm.

With the appearance of the electronic digital computer, the brain-computer metaphor was soon adopted for its heuristic usefulness. In the 1930s certain similarities between neurological organization and computer hardware were noticed. The computer opened up new possibilities and enabled researchers to model cognitive processes to develop artificial intelligence (AI) systems. Initial scepticism that the computer could adequately simulate human cognition had to be overcome. Between 1956 and 1972, well-developed theories of intelligence emerged to accommodate this new metaphor. These theories focused mainly on the types of induction involved in concept attainment and sequence extrapolation tasks (Dellarosa, 1988; Newell & Simon, 1972; Simon, 1979).

In 1960, Newell, Shaw and Simon, as well as Miller, Galanter and Pribram, published major works which originated the Information processing approach. They put forward theories that could be implemented and tested by means of the digital computer. Here the elementary information process (EIP) was regarded as the fundamental unit of analysis. Newell and Simon expanded their notion of an elementary information process in a 1972 publication, where they introduced the concept of “production systems”.

Warnings about shortcomings inherent in the information processing approach soon followed. Nevertheless, a close relationship was, and still is, maintained between cognitive psychology and Artificial Intelligence.

Whereas psychometric theories differ mainly in terms of the factors identified and the relationships between them, information processing theories differ in terms of the “level of processing” focused on, which ranges from reaction time studies at a perceptual-motor level to complex reasoning and problem solving. Both hierarchical and non-hierarchical models have been proposed in this regard (Sternberg, 1983).

Although theories contributing to the information processing approach are often applauded for their precision and testability unrivalled by other accounts, they can be criticized on a number of grounds (Dellarosa, 1988; Horn, 1986; Newell, 1973), including the following:

- Although some theorists regard the exploration of underlying cognitive mechanisms as a critical prerequisite for a more holistic understanding of thinking, the constructs involved lack external validity. This could well lead to the development of an isolated laboratory Psychology that bears no resemblance to everyday cognition.

- It is limited in terms of the type of performance studied because a large proportion of processing research makes use of a small number of units of cognitive behaviour. Such findings cannot be generalized to a wider range of intellectual application.

- The tasks developed to measure cognitive processes are of a specific nature and are only obliquely related to educational and everyday goals. Information processing research can also be criticised for not justifying the grounds on which tasks are selected.

- The rigidity of computer models contrasts sharply with the flexibility of human performance. Gestalt psychologists, for example, believe that understanding, which is a fundamental characteristic of human cognition, cannot be adequately simulated by a computer.

- The main criticism levelled against the experimental approach is that it does not accommodate the role of individual differences in processing.

To summarise, Experimental Psychology incorporates many paradigms, but tends to be dominated by the Information Processing approach. The various Information Processing theories see intelligence in terms of mental representations, the processes underlying these representations and the way in which these processes are combined. Its basic research question is concerned with the way people think. The identification and verification of hypothetical cognitive processes is regarded as a primary research goal. The methodology includes techniques such as content analysis, mathematical modelling, calculations of response time or error data and the computerized simulation of processing. The unit of analysis is the information process. It is fundamental in the sense that on a theoretical level it cannot be broken down into simpler components. This approach aims at providing a means of breaking task performance down into underlying mental processes.

The Integration of the Differential and Information Processing Approaches

The next historical phase to emerge within the field of intelligence research was the integration of the Differential and Information Processing approaches. The merging of the correlational and experimental approaches was inevitable, considering their theoretical complementarity. Two main schools of thought have been influential in this regard: the “Cognitive Correlates” and “Cognitive Components” approaches, as described primarily in publications by Robert Sternberg of Yale. Both approaches attempt to establish statistical relations between performance on psychometric tests and laboratory tasks.

The methodology of the Cognitive Correlates approach involves correlating psychometric and laboratory results, mainly reaction times. The results are then factor analysed to explore common sources of variance. This is thus an attempt to identify information processing skills that predict psychometric scores. In addition, it aims to overcome the criticism that the laboratory approach is an oversimplification. Research results, however, have been neither clear nor consistent (Egan & Gomez, 1985; Guilford, 1967; Larson & Saccuzzo, 1989; Pellegrino & Glaser, 1979; Sternberg, 1979).
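The first step of the Cognitive Correlates method, correlating a laboratory measure with a psychometric score, amounts to computing a Pearson correlation. The Python sketch below uses hypothetical reaction times and test scores invented purely for illustration; they are not research findings. In a full analysis, many such correlations would subsequently be factor analysed, a step not shown here.

```python
import math

def pearson(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data for six examinees: mean reaction time (ms) on a laboratory task
# and raw score on a psychometric test. Illustrative values only.
reaction_ms = [520, 480, 610, 450, 700, 560]
test_score = [24, 27, 19, 30, 15, 22]

r = pearson(reaction_ms, test_score)
print(round(r, 2))  # negative in these made-up data: slower responders score lower
```

A single coefficient like this says nothing about which processes produce the relationship, which is one reason the approach yielded results that were difficult to interpret.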

Subsequent research aimed to analyse more complex cognitive tasks in terms of their processing requirements. This came to be known as the Cognitive Components approach. This approach is task analytic in nature and attempts to specify the information processing components of tasks used for assessing mental abilities. It involves the theoretical and empirical analysis of task performance on standardised aptitude tests to develop performance models. Methods used range from intuitive analysis to computer simulation, protocol analysis and mathematical modelling, as well as combinations of these methods. The information processing demands of psychometric tasks are thus analysed. This approach is often referred to as S-O-R research in that the emphasis is on the stimulus and the examinee. Tasks used in these analyses include verbal and geometric analogies, verbal classifications, syllogisms and series completions. The most prominent model to emerge within this methodological orientation is Sternberg’s Componential theory of intelligence (Chipman, Segal, & Glaser, 1985; Embretson, Schneider, & Roth, 1986; Pellegrino & Glaser, 1979; Sternberg, 1977a, 1977c, 1985).
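The mathematical modelling used in componential analyses typically treats solution time as the sum of the durations of the processing components an item requires. The Python sketch below assumes a simple additive model with two hypothetical components, labelled ‘encode’ and ‘compare’ purely for illustration, generates synthetic response times from assumed durations, and recovers those durations by ordinary least squares. It is not a reconstruction of Sternberg’s componential model or its parameters.

```python
def solve(a, b):
    """Solve the square linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_additive_model(counts, times):
    """Least-squares estimates of an intercept plus one duration per component."""
    rows = [[1.0] + list(c) for c in counts]  # leading 1.0 models residual (base) time
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * t for r, t in zip(rows, times)) for i in range(k)]
    return solve(xtx, xty)  # normal equations: (X'X) beta = X'y

# Hypothetical item types: how many 'encode' and 'compare' operations each requires.
counts = [(1, 1), (2, 1), (1, 3), (3, 2), (2, 4)]
# Synthetic times from assumed durations: base 300 ms, encode 150 ms, compare 80 ms.
times = [300 + 150 * e + 80 * c for e, c in counts]

estimates = fit_additive_model(counts, times)
print([round(v, 1) for v in estimates])  # recovers the assumed durations
```

With real data the fit would not be exact, and the quality of the fit across item types is what lends (or withholds) support for a proposed set of components.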

There were also other attempts to integrate the information processing and differential approaches. One example is Royce and Powell’s (1983) “Systems Perspective”, which focused on structure as a basis for understanding function and considered psychological functioning in an integrated, holistic manner encompassing styles, values, cognition, affect, sensory systems and motor systems.

Despite various attempts to consolidate the above theoretical stances, tremendous diversity still exists in the field of Cognitive Psychology. The Contextualist, Developmental, Psychophysiological and Production (Artificial Intelligence) approaches are summarised below.

The Contextualist Approach

Based on criticism of the Differential and Information Processing paradigms, the Contextualist approach to intelligence research also emerged. Contextual factors had often been downplayed by theorists whose bias towards genetics led them to reduce intelligence to a manifestation of differentially distributed biological attributes, and who used academic performance as an indicator of it.

Criticism was also widely levelled against the Information Processing approach, specifically against research that took cognition completely out of context. Contextualist educators and psychologists introduced the constructs of cultural bias and fairness, conducted a great deal of research on practical intelligence, and increasingly emphasised contextual factors (Berry, 1974, 1984; Ford & Tisak, 1983; Frederiksen, 1986; Gordon & Terrell, 1981; Keating, 1984; Neisser et al., 1976, 1978; Schlecter & Toglia, 1985; Scribner, 1986).

Examples of the topics researched by Contextualists are:

- the application of ethnographic and experimental techniques to study work performance;

- expert-novice comparisons of job-related skills;

- the investigation of non-academic intelligence and everyday problem solving; and

- cross-cultural factors.

The Developmental Perspective

An interest in a Developmental perspective on intelligence has prevailed throughout the above-mentioned phases and has culminated in different theoretical positions (Broughton, 1981; Gallagher & Reid, 1981; Keating, 1984; Sigel & Cocking, 1977). These include emphases on Developmental Structuralism, Genetic Psychology and Genetic Developmental Epistemology. Major theoretical contributions in Developmental Psychology were made by theorists such as Piaget and Vygotsky.

Piaget took a developmental approach to the relationship between knowledge and reality. He made use of qualitative methods in studying both the underlying structure and the manifestation of intelligence as it develops through qualitatively distinct stages which are triggered by adaptive action.

According to Piaget, cognitive operations are interlinked by means of cognitive structures. A “structure” describes the relationship among mental actions, while operations are the units of logical thinking. He saw cognitive structures as objectively real, organizing features of thought and not merely as theoretical abstractions. Piaget’s theory can be regarded as constructivist in that knowledge results from a progressive “building” or structuring process: the individual adapts to his/her environment by actively organizing information through assimilation and accommodation to create a knowledge base. The functions of assimilation and accommodation are complex elaborations of the process of successive imbalance and re-equilibration.

According to Piaget, however, the structuring of information can be limited by the cognitive developmental phase involved. Development depends on four critical organizing factors, namely biological maturation, experience, the influence of the social environment, and equilibration or regulatory processes. Piaget proposed that coherent logical structures underlie thought, and identified developmental stages that differ qualitatively in their internal structural organization. These are:

- the sensori-motor stage (birth to approximately two years of age);

- the stage of concrete operations (approximately two to twelve years of age), often subdivided into a pre-operational and a concrete operational phase, which yields the four stages commonly attributed to Piaget; and

- the formal operational stage (from twelve years of age throughout adulthood).

Piaget’s contributions mainly provided illustrative and confirmatory evidence, without validation of the findings. He also failed to adequately address the relationship between competence and performance. According to Piaget, the concept of “structure” can be used in an explanatory manner, in that deep structures explain surface appearances. This view is, however, not characteristic of Structural Functionalism, where the term “structure” refers to surface phenomena while the term “function” is used in an explanatory sense. Some therefore regard Piaget’s integration of structuralism and functionalism as problematic.

Vygotsky, another prominent developmental psychologist, criticised Piagetian theory for its emphasis on the individual’s construction of internal mental structures without recognising the important role of social interaction. He also rejected the traditional emphasis on genetics and biological maturation as key determinants that could impose “ceiling effects” on intellectual development, and proposed, amongst others, the concepts of “learning potential” and “mediation”.

Vygotsky saw the individual’s internalisation of social interactions as a key factor in the development of intelligence. Because Vygotsky included both developmental and contextual considerations in his approach, his work is regarded as useful and promising for the study of the nature and development of intellectual functioning.

Alongside theorists such as Piaget and Vygotsky, who concentrated on developing broad philosophical and theoretical positions on cognitive development, other researchers have attempted to consolidate the large body of research findings into an inclusive theoretical model. Horn (1970, 1978), for example, reviewed the literature on cognitive development over the entire life span. He found the data to be inconsistent and contradictory, but drew the following broad conclusions:

- The first two years of life seem to be characterized by the development of sensori-motor alertness.

- Development is dependent on the principles of classical conditioning and repetition.

- Around the second year of life, a developmental phase begins which continues throughout the life-span, but is most marked until the sixth year. Piaget identified this as a pre-conceptual phase. In this stage the focus is primarily on perceptual processes used in exploring the environment. Objects are represented symbolically and gradually these impressions are integrated into complex symbol systems. Language development is a related aspect which also takes place during this period.

- Clearly marked changes in cognitive structure occur during the childhood years. From about the ninth to the fifteenth year a shift occurs from the acquisition of basic concepts and symbol systems to the internalization of culture and the development of sophisticated cognitive skills. This period is characterized by the development of crystallized abilities.

- Cognitive development during the adult years has not been researched as thoroughly as that during childhood and youth. Crucial questions addressed in the study of adult cognitive development concern the structure of the intellect and the level of intelligence. Researchers have tried to establish whether cognitive structures remain stable during adulthood; whether a differentiation of preceding phases continues into adulthood and old age; or whether structural de-differentiation occurs.

- With regard to the level of intelligence, there have been attempts to establish whether it continues to increase, stabilises or declines during adulthood. Overall, the evidence seems to support a process of structural de-differentiation.

The Developmental approach has stimulated a wealth of research, and some attempts to integrate this research on a theoretical level as well as to develop elaborate theoretical and philosophical positions. A more detailed discussion of this topic is, however, outside the scope of this article.

Early Psychophysiological Models of Intelligence

A number of speculative psychophysiologically oriented models also emerged (Eysenck, 1986; Hendrickson & Hendrickson, 1980; Hurlbert & Poggio, 1985; Keating, 1984; Ratcliff, 1981; Simon, 1979; Verster, 1982; Vogel & Broverman, 1964). This field includes a variety of approaches that are based on philosophies of the mind-brain relationship and that range from anatomical studies, such as brain damage research and attempts to measure intelligence by means of parameters extracted from spectral decomposition of resting EEGs (electroencephalograms) or AEPs (averaged evoked potentials), to computerized simulations of neurological processes. Most of this early work focused on biological correlates of IQ scores, with explanations couched in terms of speculative concepts such as Neural Efficiency (Ertl & Schafer, 1969) and biochemical/physiological features such as myelin sheath density (Miller, 1994) and relative grey and white matter densities (Raz, Millman, & Sarpel, 1990; Gur, Turetsky, Matsui, Yan, Bilker, Hughett, & Gur, 1999).

Cartesian Dualism and Structural Monism

A fundamental philosophical issue underlying theoretical controversies in cognitive theory is the mind-body relationship. This issue was initially highlighted by the work of Descartes and Hobbes. Descartes regarded the mind as a metaphysical entity interacting with the material body, whereas Hobbes saw thought in purely mechanistic terms (Dellarosa, 1988). The mind-brain relationship has since been interpreted from various perspectives, including the Identity, Dualism, Interactionism, Materialism, Physicalism and Mentalism (Pribram, 1986) positions, as well as the Associationist and Evolutionist (Verster, 1982) positions. Pribram’s (1986) work on the so-called mind-brain dichotomy warrants special attention. Pribram integrated various positions on the matter in his explanation of Structural Monism. He interprets mental (or metaphysical) and physical phenomena as different manifestations of the same underlying structure, the difference reflecting the observer’s perspective.

Current Neuroscience and Computational Models of Intelligence

With the introduction during the past 15 years or so of new imaging technologies for the in vivo study of brain morphology and function, an increased understanding of brain connectivity, and advances in computational models of cognitive processes and dynamical non-linear systems theory, there has been a revolution in the search for causal explanations of human intelligence and individual differences. The older speculative model of neural efficiency was substantially reworked as one of neural network organizational adaptability or plasticity. This perspective was formally proposed as a model and evaluated empirically; although the evaluation was not entirely successful, the hypothesis of neural adaptability and biological self-organization remains tenable (Garlick, 2002; Castellanos, Leyva, Buldú, Bajo, Paúl, Cuesta, Ordóñez, Pascua, Boccaletti, Maestú, & del-Pozo, 2011). Around the time Garlick’s model was published, another influential book appeared detailing evidence of biological self-organizing systems (Camazine, Deneubourg, Franks, Sneyd, Theraulaz, & Bonabeau, 2001). This property of biological systems, and its implications for evolutionary models of the ‘fitness’ of intelligence, was further elaborated upon by Edelmann and Denton (2007). This work has been extended to the imaging of human brain tracts and networks using diffusion MRI: researchers associated with the Human Connectome Project have recently published a series of articles showing image reconstructions of several human and animal brain tracts (Wedeen, Rosene, Wang, Dai, Mortazavi, Hagmann, Kaas, & Tseng, 2012; Toga, Clark, Thompson, Shattuck, & Van Horn, 2012). Within the Artificial Intelligence (AI) community, computational models of the human brain that learn and adapt with experience, and that are closely modelled on known neuroscience, have now been constructed (Roy, 2012; Eliasmith, Stewart, Choo, Bekolay, DeWolf, Tang, & Rasmussen, 2012).

It is interesting to see how AI researchers define intelligence. Poole, Mackworth, & Goebel (1998), for example, write:

Computational intelligence is the study of the design of intelligent agents. An agent is something that acts in an environment – it does something. The central scientific goal of computational intelligence is to understand the principles that make intelligent behavior possible, in natural or artificial systems. The main hypothesis is that reasoning is computation. The central engineering goal is to specify methods for the design of useful, intelligent artifacts (p. 1).

This recent work and thinking is now moving us into the realm of problem solving and reasoning, and a richer conceptualisation of cognition which extends beyond equating it with intelligence.

Problem Solving

A distinguishing characteristic of life is that it involves the solving of problems (Popper, 1959). Living beings solve problems both phylogenetically, that is, collectively through the adaptation of the species, and ontogenetically, that is, directly through trial-and-error learning by the individual. These two types of problem solving give rise, in turn, to structural changes.

The term problem solving thus encompasses a vast range of individual and collective, as well as conscious and subconscious types of behaviour. The literature dealing with problem solving in psychology, however, generally appears as a subsection of the larger body of research on intelligence. Various emphases in the investigation of problem solving can be traced historically. The analysis of problem solving processes was first carried out by Gestalt psychologists, Behaviourists and Associationists (Greeno, 1985).

Before current methodology and theoretical approaches to problem solving research are described, some of the definitions found in the literature are presented:

- All cognitive activities are fundamentally problem solving. This view is based on the observation that human cognition seems to be purposeful and directed towards achieving goals and removing obstacles to attaining them. Some researchers go as far as to view all behaviour as an instance of problem solving. Some theorists, however, regard such an encompassing view of problem solving as too abstract to be of any empirical use (Anderson, 1985; Rips, 1994).

- A problem is a task which involves making a choice where the outcome is uncertain. Problem solving then requires the construction of a mental representation of the problem and the identification of a goal which incorporates certain criteria and also brings alternative concepts related to the criteria into consciousness (Hirschman, 1981).

- A problem is an undesirable situation in which unfamiliar elements cause standard responses to be inadequate. Problem solving thus involves finding appropriate and adequate responses to change the situation (Goldsmith & Matherly, 1986).

- Problem solving is a search through a problem space where the latter consists of various problem states which refer to both physical states and states of knowledge (Anderson, 1985; Greeno, 1976; Knaeuper & Rouse, 1985; Newell, 1973; Newell & Simon, 1972).
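The problem-space definition lends itself to a small worked example. The sketch below treats the classic water jug problem (measure a target amount using two unmarked jugs) as a set of states (the contents of each jug) connected by operators (fill, empty, pour), and finds the shortest sequence of operators by breadth-first search. Breadth-first search is chosen here only for illustration and is not presented as a model of how people search; the function names are hypothetical.

```python
from collections import deque


def successors(state, cap_a, cap_b):
    """Apply every operator to a state (a, b) and return {operator name: new state}."""
    a, b = state
    pour_ab = min(a, cap_b - b)  # amount that fits when pouring A into B
    pour_ba = min(b, cap_a - a)  # amount that fits when pouring B into A
    return {
        "fill A": (cap_a, b),
        "fill B": (a, cap_b),
        "empty A": (0, b),
        "empty B": (a, 0),
        "pour A->B": (a - pour_ab, b + pour_ab),
        "pour B->A": (a + pour_ba, b - pour_ba),
    }


def solve_water_jug(cap_a, cap_b, target):
    """Breadth-first search from (0, 0); returns the shortest list of moves, or None."""
    start = (0, 0)
    parent = {start: None}  # state -> (previous state, move that produced it)
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if target in state:  # goal test: either jug holds the target amount
            moves = []
            while parent[state] is not None:
                state, move = parent[state]
                moves.append(move)
            return moves[::-1]
        for move, nxt in successors(state, cap_a, cap_b).items():
            if nxt not in parent:  # each problem state is visited at most once
                parent[nxt] = (state, move)
                queue.append(nxt)
    return None  # target unreachable from the start state
```

For jugs of 4 and 3 units and a target of 2, the search returns a four-move solution; when the target cannot be measured (for example, 3 units with jugs of 4 and 2), it returns `None` after exhausting the problem space.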

Problem solving research has thus primarily focused on the following aspects (Chi, Feltovich & Glaser, 1981; Feldhusen, Houtz & Ringenbach, 1972; Greeno, 1978; Hunt & Lansman, 1986; Schultz & Lockhead, 1988; Simon, 1979; Sweller, 1988):

- the phenomena of learning, reinforcement and extinction;

- mechanisms involved in solving well-structured problems, or laboratory tasks, such as the well-known Tower of Hanoi, Missionaries and Cannibals and Water Jug problems;

- the identification of strategic approaches and the circumstances under which they are applied;

- the modelling of expert knowledge storage, or research on the nature of expertise;

- the modelling of knowledge representation, including Rumelhart and Ortony’s (1977) representation of the structural aspects of problem solving; Minsky’s (1974) frames as complex data structures for representing stereotypical situations; Schank and Childers’s (1984) scripts, plans and goals; and Siegler’s (1981) rules and principles;

- the exploration of problem solving in semantically rich domains such as medical diagnosis, accounting and engineering (where both specific and general knowledge is required), radiology and physics (where encoding and representation skills seem to be critical), and chess (which seems to involve rapid recognition more than logical analysis);

- the development and testing of production systems; and

- modelling of the understanding of natural language instruction.
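The Tower of Hanoi listed above is a standard example of a well-structured task that can be solved by decomposing the goal into subgoals. A minimal recursive sketch follows; it shows a textbook solution strategy rather than a model of human performance, and the function name and peg labels are arbitrary.

```python
def hanoi(n, source="A", spare="B", target="C"):
    """Return the list of (disk, from_peg, to_peg) moves that transfers n disks from source to target."""
    if n == 0:
        return []
    return (
        hanoi(n - 1, source, target, spare)    # subgoal 1: move the n-1 smaller disks out of the way
        + [(n, source, target)]                # move the largest disk to the target peg
        + hanoi(n - 1, spare, source, target)  # subgoal 2: rebuild the smaller tower on top of it
    )
```

The solution for n disks contains 2**n - 1 moves, so `len(hanoi(3))` is 7, and `hanoi(2)` returns `[(1, "A", "B"), (2, "A", "C"), (1, "B", "C")]`.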

This research has resulted in a number of problem solving models, reflecting the following approaches (Feldhusen et al., 1972; Greeno, 1978; Knaeuper & Rouse, 1985):

- stages;

- levels of problem solving behaviour;

- the representation of information; and

- lists of problem solving processes.

Some very specific and very general models have been formulated. An example of a specific and detailed model is that of Sternberg (1977c; 1979; 1983). According to him, analogical reasoning can be analysed in terms of the following processes:

(a) encoding, which involves the translation of a stimulus into an internal representation;

(b) inference, which means discovering a rule that relates one concept to another;

(c) mapping, or the discovery of a higher rule that relates one rule to another;

(d) application, whereby a rule is generated that extrapolates from an old concept to a new concept on the basis of an analogy to a previously learned rule;

(e) comparison, which involves the comparison of answer options to an extrapolated new concept to determine which option is closest to the extrapolated concept;

(f) justification, whereby a preferred answer option is compared to the extrapolated concept to determine whether the answer option is close enough to the extrapolated concept to justify its selection as the answer;

(g) the response, which involves the communication of the chosen answer through an overt act.
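The seven components can be strung together into a toy analogy solver for items of the form A : B :: C : ?. In this minimal sketch every term is assumed to be encoded as a set of numeric attributes, inference is taken as the change in each attribute from A to B, and justification uses a fixed distance threshold. These representational choices, the attribute names and the function names are hypothetical and are not Sternberg’s own formalisation.

```python
import math


def encode(term):
    """(a) Encoding: translate a stimulus into an internal representation (here a dict of attributes)."""
    return dict(term)


def solve_analogy(a, b, c, options, tolerance=0.5):
    """Solve A : B :: C : ? over hypothetical numeric attribute representations."""
    A, B, C = encode(a), encode(b), encode(c)
    # (b) Inference: the rule relating A to B, taken here as the per-attribute change.
    rule = {k: B[k] - A[k] for k in A.keys() & B.keys()}
    # (c) Mapping: which attributes of A correspond to attributes of C.
    mapped = A.keys() & C.keys()
    # (d) Application: extrapolate the ideal answer by applying the A -> B rule to C.
    ideal = {k: C[k] + (rule.get(k, 0) if k in mapped else 0) for k in C}

    # (e) Comparison: Euclidean distance of an encoded option from the ideal (over the ideal's attributes).
    def distance(option):
        opt = encode(option)
        return math.sqrt(sum((opt.get(k, 0) - v) ** 2 for k, v in ideal.items()))

    best = min(options, key=lambda label: distance(options[label]))
    # (f) Justification: accept the best option only if it is close enough to the ideal.
    if distance(options[best]) > tolerance:
        return None
    # (g) Response: communicate the chosen option.
    return best


A = {"size": 1, "shading": 0}
B = {"size": 2, "shading": 0}  # A -> B: size increases by 1
C = {"size": 1, "shading": 1}
options = {
    "1": {"size": 2, "shading": 1},
    "2": {"size": 1, "shading": 1},
    "3": {"size": 2, "shading": 0},
}
answer = solve_analogy(A, B, C, options)
```

Here the ideal answer is a shaded figure of size 2, so `answer` is `"1"`. If option 1 is removed, the closest remaining options are a full unit away and justification rejects them, returning `None`.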

An example of a general model is that of Hirschman (1981) who identified three steps of problem solving, namely:

(a) preparation;

(b) production; and

(c) judgement.

These very general and very specific models are not always ideal for practical application. Although there is no single scientifically meaningful level for the study of problem solving, a number of prominent theorists have consequently argued for the development of theoretical models at an intermediate level of theorising: models that are general enough to cut across many domains, yet specific enough to be operationalised and evaluated in terms of convergent and discriminant validity.

Although some of these models gave rise to a number of sophisticated computer production systems applying (i) the generate-and-test method, (ii) the heuristic search method and (iii) the hypothesise-and-match method (Newell, 1973), research in the area of problem solving is characterised by inadequate conceptualization and a lack of empirical evidence. The further development of appropriate and effective methodology is also a crucial prerequisite for further research.
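Of these methods, generate-and-test is the simplest to illustrate: a generator proposes candidates without using knowledge of the goal, and a separate test accepts or rejects each one. The sketch below applies it to a toy puzzle chosen purely for illustration (three-digit numbers equal to the sum of the cubes of their digits); the function names are hypothetical. Heuristic search would differ by using knowledge of the goal to order or prune the candidates.

```python
from typing import Callable, Iterable, Iterator, TypeVar

T = TypeVar("T")


def generate_and_test(candidates: Iterable[T], is_solution: Callable[[T], bool]) -> Iterator[T]:
    """Yield every generated candidate that passes the test; the generator uses no goal knowledge."""
    for candidate in candidates:
        if is_solution(candidate):
            yield candidate


def equals_sum_of_digit_cubes(n: int) -> bool:
    """Test: is n equal to the sum of the cubes of its decimal digits?"""
    return n == sum(int(d) ** 3 for d in str(n))


# Blind generator: every three-digit number, in order.
solutions = list(generate_and_test(range(100, 1000), equals_sum_of_digit_cubes))
```

Here `solutions` is `[153, 370, 371, 407]`, found only after all 900 candidates have been generated and tested, which shows why the method becomes impractical as the problem space grows.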

Problem solving will, for current purposes, be regarded as referring to a broad range of predominantly active intellectual and practical efforts that are directed towards changing a particular state to a different, and possibly desirable or reinforcing, end state. This mainly involves the purposive application of previously acquired knowledge and skills to task materials in a way perceived (meta-cognitively) as being appropriate.

The concept of problem solving is closely related to that of reasoning.

Reasoning

Reasoning is a central everyday activity. The very fact that the concept of reasoning refers to such a wide spectrum of behaviours makes it vulnerable to criticism in terms of its theoretical and practical utility. Some researchers fail to see any advantages in investigating diverse domains under a single heading (Rips, 1984).

In contrast to problem solving research, reasoning research has remained within the realm of inductive and deductive reasoning and has focused on critical questions such as the degree of generativity involved in reasoning, evidence for logical structures underlying thought, explanations for some of the well-researched non-logical biases (such as the atmosphere effect, lexical markings, figural bias and the caution heuristic) and the complexities of natural language.

As with most constructs in intelligence research, the concept of reasoning has been described in various ways and by representatives of different disciplines, including economists and mathematicians. Both these disciplines stress the role of reasoning in decision making, and their work could well be relevant to psychology (Evans, 1982).

Some theorists conceive of reasoning in very general terms, for example as encompassing almost any process of forming or adjusting beliefs and as nearly synonymous with cognition itself (Rips, 1984). An example is the view that reasoning refers to the process by which people evaluate and generate logical arguments. Reasoning is also described as goal-directed mental activity aimed at arriving at a valid conclusion on the basis of a given set of facts, arguments, premises or reasons (Erickson, 1974).

An additional dimension often referred to or implied, is that of generativity or creativity (Holyoak & Nisbett, 1988). According to this view, reasoning involves the use of rules in drawing inferences that extend one’s knowledge. This view emphasizes the unique nature of reasoning in terms of its centrality in explaining human behaviour and its productive and creative capacity.

The above views, and the research on reasoning that followed from them, are regarded by some as limited in that research has to date focused primarily on deductive reasoning (e.g. syllogisms) and inductive reasoning (studied by means of geometric shapes, dot patterns and schematic faces). Broader, more naturalistic theorising is needed.

A more detailed delineation of the concept of reasoning is provided by Sternberg (1986) who regards reasoning as the controlled and mediated application of selective encoding and selective comparison, which are essentially inductive in nature, as well as selective combination, which is essentially a deductive process. According to Sternberg, tasks that involve none of these processes do not involve reasoning per se. Tasks that involve some of these processes involve reasoning to the extent that the application of the processes is not automatic.

The content-dependent nature of reasoning has sparked much interest among researchers (Evans, 1982; Rips, 1994). Any definition of the concept of reasoning should thus make some reference to task content.

From the definitions to be found in the literature, reasoning can thus be described in terms of the degree to which mental activity involves the controlled selection of content (facts and premises) structured in memory, and the recombination of its elements according to certain rules in order to generate probable and necessary conclusions, or inferences, referred to in the literature as inductive and deductive reasoning respectively.

Deductive and Inductive Reasoning

Reasoning is commonly divided into inductive and deductive types (Evans, 1982). Deductive reasoning is described in terms of proofs in which a conclusion follows from a set of given premises. Inductive reasoning attempts to derive empirical generalizations and probable premises from exemplars of phenomena (Haig, 2005). With regard to validity, inductive inferences are, strictly speaking, not valid, whereas correctly drawn deductive inferences are. The validity of an argument is unaffected by whether its premises and conclusion are actually true or false: invalid arguments may well lead to true conclusions. Logical systems comprise sets of rules for making valid deductions. The distinction between inductive and deductive reasoning merely reflects a philosophical difference in the criteria used to evaluate an argument (where an argument takes the form of a set of sentences, or premises, followed by a conclusion). The use of these criteria does not demonstrate a psychological distinction between the two types of reasoning, because there are no grounds for assuming that different psychological processes are involved. However, if there is only one process, its details should be delineated. Tasks that involve both inductive and deductive processes include many daily tasks, scientific inference, and mathematical and logical proofs.
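The point that inductive conclusions are not strictly valid can be shown with a series-completion item. In the hypothetical sketch below, two rules fit the exemplars 1, 2, 4 equally well yet predict different next terms, so the exemplars alone cannot guarantee the conclusion. The rules, the exemplar series and the names are illustrative assumptions.

```python
def doubling(n):
    """Hypothesis 1: each term doubles the previous one (term n = 2**n)."""
    return 2 ** n


def add_increasing(n):
    """Hypothesis 2: add 1, then 2, then 3, ... (term n = 1 + n(n+1)/2)."""
    return 1 + n * (n + 1) // 2


observed = [1, 2, 4]  # exemplars of a series (terms 0, 1, 2)
hypotheses = {"doubling": doubling, "add 1, 2, 3, ...": add_increasing}

# Keep every hypothesis that reproduces all the observed exemplars.
consistent = {
    name: rule for name, rule in hypotheses.items()
    if all(rule(i) == x for i, x in enumerate(observed))
}
# Each consistent hypothesis predicts the next term; the predictions disagree (8 vs 7).
predictions = {name: rule(len(observed)) for name, rule in consistent.items()}
```

Both hypotheses survive the consistency check, so any choice between them rests on something other than the exemplars, which is the sense in which inductive conclusions are probable rather than necessary.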

Having briefly discussed the concepts of problem solving and reasoning, the relationship between the two constructs will now be explored.

The Relationship Between Problem Solving, Reasoning and Logic

Because solving problems usually involves reasoning processes, or vice versa (depending on how the concepts are defined), both reasoning and logic are considered here.

The concepts of reasoning and problem solving have both been criticized for being too general and abstract: the range of behaviours they cover is so wide that it limits their practical usefulness. Some problem solving studies do, however, focus on clear-cut tasks. Most reasoning models are indebted to problem solving theory. Some researchers, however, prefer the concept of reasoning to that of problem solving as being empirically more useful (Rips, 1994).

The concept of “logic” refers to the construction of formal systems by which given formulae can be transformed according to rules governing the manipulation of symbols. It can be seen as a sub-discipline of philosophy and mathematics and formally specifies criteria for an argument to be logically correct. A logical system thus comprises rules of inference which permit true conclusions to be derived from true premises and in this sense provides a normative model for reasoning behaviour (Anderson, 1985).

It is, however, a misconception to regard mental operations as fundamentally logical in nature. In fact, much of human thinking lies outside the boundaries of established formal logic. In contrast to the subtleties and complexity involved in human thinking, only two values, namely true and false, are permitted in standard logic (Wason & Johnson-Laird, 1972).

A proposition can be seen as a statement with a truth value. Premises have truth values and are therefore propositions. The elements of premises, for example P and Q, are component propositions, and propositions can be combined to form new ones. In logic, an argument form is formally valid if every instance of it that has true premises also has a true conclusion. Where the conclusions are not formal consequences of the premises, inferences may still be regarded as valid in a derivative sense if the conjunction of the premises with certain conceptual truths formally implies the conclusion.
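Formal validity, and the two-valued character of standard logic noted above, can be checked mechanically with a truth table: an argument form is valid if no assignment of true or false to its component propositions makes all premises true and the conclusion false. The sketch below is a minimal illustration with hypothetical function names, contrasting modus ponens with the invalid form known as affirming the consequent.

```python
from itertools import product


def implies(p, q):
    """Material conditional: 'if p then q' is false only when p is true and q is false."""
    return (not p) or q


def counterexample(premises, conclusion, n_vars):
    """Return the first truth assignment making every premise true and the conclusion false, or None."""
    for values in product([True, False], repeat=n_vars):
        if all(premise(*values) for premise in premises) and not conclusion(*values):
            return values
    return None


def is_valid(premises, conclusion, n_vars=2):
    """An argument form is valid iff no assignment yields true premises with a false conclusion."""
    return counterexample(premises, conclusion, n_vars) is None


# Modus ponens: if P then Q; P; therefore Q.
modus_ponens = ([lambda p, q: implies(p, q), lambda p, q: p], lambda p, q: q)
# Affirming the consequent: if P then Q; Q; therefore P.
affirming_consequent = ([lambda p, q: implies(p, q), lambda p, q: q], lambda p, q: p)
```

`is_valid(*modus_ponens)` is `True`, while affirming the consequent fails with the counterexample P false, Q true. When P and Q are both true, however, the same invalid form yields a true conclusion, which illustrates that invalid arguments may still lead to true conclusions.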

The question of whether deductive logic is a natural characteristic of human thought depends on the paradigm adopted. A rationalist approach, such as that presented by Kelly in 1955 (Evans, 1982), describes people as scientists who continually construct theories, make predictions and collect evidence. This approach assumes that people possess some system of deductive logic.

Rationalism is manifested in a number of approaches in reasoning research. Behaviourists, however, interpret behaviour as based on a personal history of reinforcement rather than on an internalised logical system. Many intermediate positions can also be identified. There are various systems of formal logic and the criteria of formal logic are relatively simple compared to the complexities of natural language (Evans, 1982).

Formal logic can thus be criticised for not accommodating language factors. Certain features of formal logic are also linguistically meaningless because logic refers to structural aspects regardless of the semantic correctness of a conclusion (Evans, 1982).

Findings on the dependency of thinking processes on content further clarify the relationship between logic and reasoning. This content dependency is reflected by findings indicating, for example, that (a) thematic or known content seems to affect logical performance; (b) beliefs associated with certain contents may bias responses; and (c) semantic contexts influence interpretation and inference. Researchers have found that content pertaining to subjects’ personal experience may facilitate performance by triggering the use of existing representations and knowledge systems. Responses to known content simply reflect the subject’s experience and may or may not accord with the principles of logic. Performance on known content therefore does not necessarily indicate reasoning ability.

This raises the question of whether all reasoning is simply a function of specific experience or whether it can be interpreted in terms of an underlying system of reasoning competence which is content independent but influenced and modified by specific content. No conclusive evidence is available on this matter as evidence on logical reasoning is insufficient to prove the existence of underlying logical competence (Evans, 1982).

The generalisation hypothesis seems to be an appropriate explanation of many of the research findings: There is the possibility that subjects simply generalise from previous experience. Various research studies have verified this by indicating that the content and direction of reasoning processes are highly stimulus bound. Evidence for formal logical competence is surprisingly lacking. This finding contradicts Piaget’s view of adult intelligence as logical. It does, however, fully support Allport’s view that the nature of cognition is content specific.

One interesting and somewhat puzzling finding is that when subjects are asked to give a verbal explanation of their reasoning responses, their verbal protocols seem to be constructed as a justification of their behaviour, and reveal distinctly logical thought (Evans, 1982). This discrepancy between performance and introspection raises the question of why this capacity for logical reasoning is not used when first solving a problem. Could it be that the greater self-awareness and metacognitive involvement of such explanations and justifications provide easier access to the rules of logic?

Existing research findings therefore seem to suggest that logical competence is not necessarily reflected in reasoning performance and that it is affected by a number of variables which influence the application of this competence. Task interpretation and cognitive style are given as examples of such moderator variables. Content also plays a central role in reasoning, regardless of the existence of underlying logical competence.

The relationship between formal logic and reasoning can be summarized with the statement that logic provides the principles by which the validity of deductive reasoning can be evaluated.

A Theory-Based Model of Cognitive Processes

A powerful research catalyst in the field of cognitive psychology can thus be found in questions regarding the structure of the mind and its bearing on reasoning and problem solving. Research questions on the topic gave rise to the development of a number of theoretical and methodological approaches – all of which are characterised by specific theoretical shortcomings. The proposed model represents a systems approach concerned with function as a basis for understanding structure, thereby accommodating both the process and structural approaches. This model is thus conceived at the interface between experimental and differential psychology and contributes towards the body of research emphasising the importance of increasing integration that is emerging as a trend in cognitive psychology.

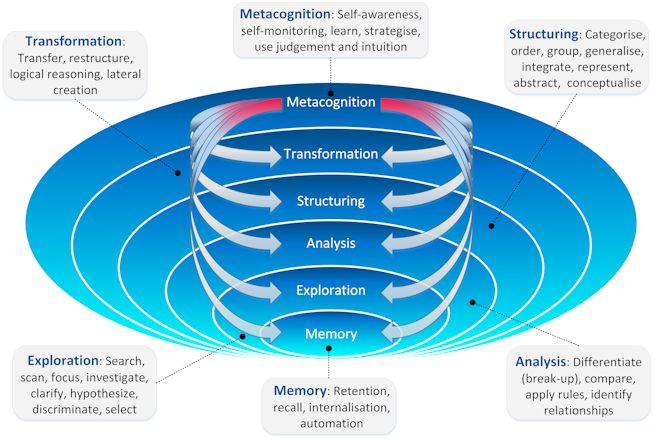

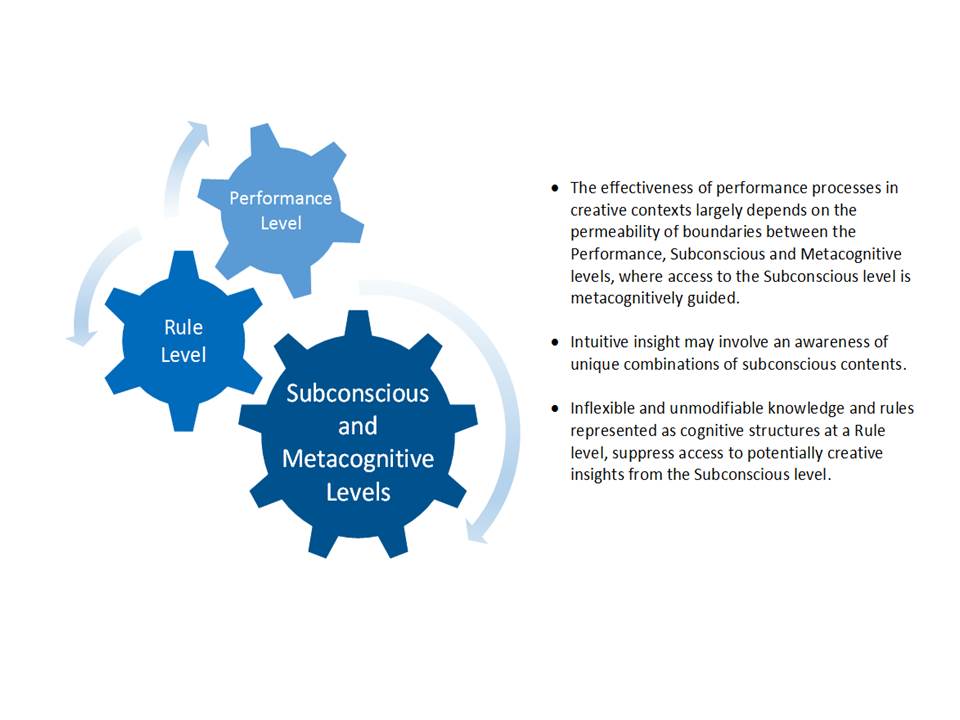

Within the model, five cognitive processes are envisaged as operating on four “levels” or “modes” of processing. The processes reflect functional unities and are regarded as having descriptive rather than explanatory value. They are defined and compared with similar constructs in the literature in an effort to expand the existing nomological network. The processing constructs are represented as overlapping fields of a matrix, a view comparable to that of “holons”, a term used by Wilber (2000) to denote the hierarchical organisation of the universe, in which evolution involves the emergence of increasingly transcendent yet inclusive systems. Consecutive levels of systems or functional complexity therefore overlap to some degree, with higher levels of organisation depending on preceding levels. This offers a more elegant theoretical perspective than the stage, phase and category models found in intelligence research. Figure 1 shows the holonic representation of processes.

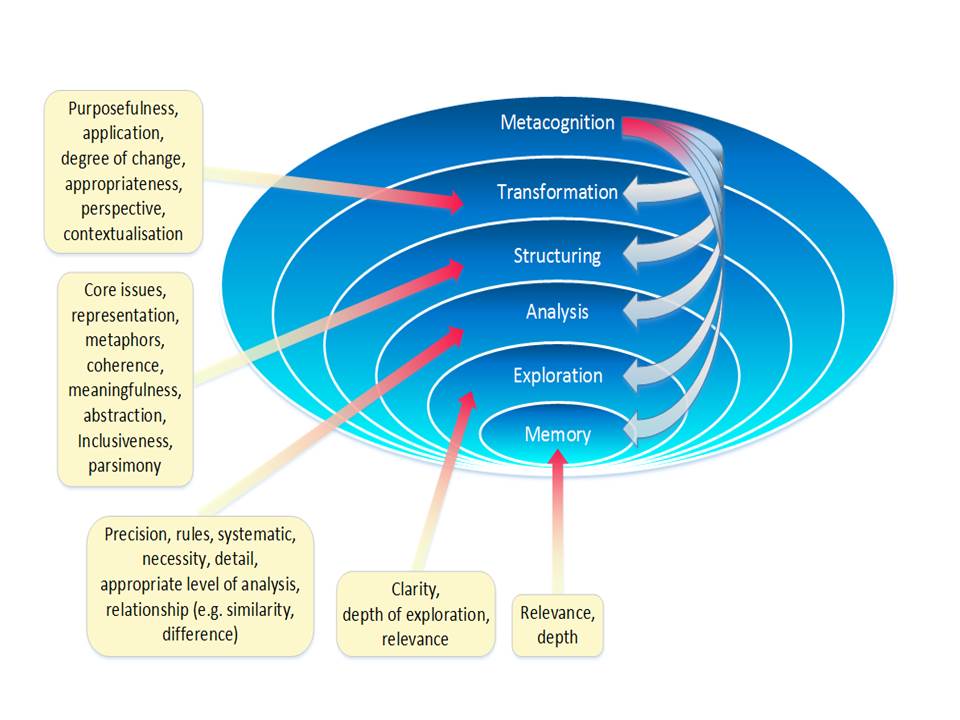

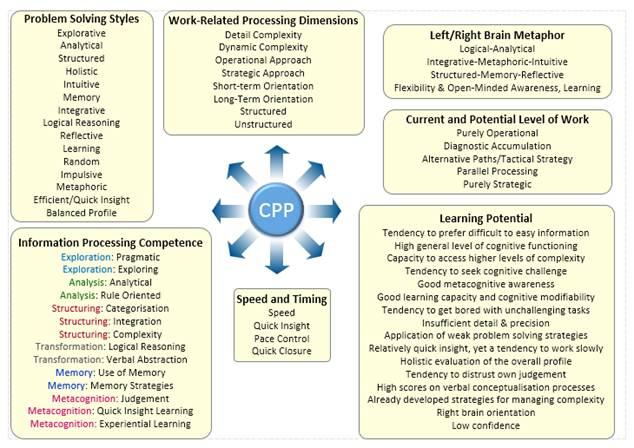

In Figure 1, Metacognition is both the encompassing awareness and the process that is a prerequisite for effectiveness and capability, although one can still explore, analyse, structure and transform without a great deal of metacognitive involvement. Metacognition is involved in all the processing categories, guiding each via specific criteria that form the basis of the Cognitive Process Profile (CPP) assessment. These criteria are shown in Figure 2.

Figure 2: The Metacognitive Criteria Guiding Each Process

The four levels or “modes” of processing are defined as follows:

| Level/mode | Description |
|---|---|
| Performance | Dealing with task material (external focus) |
| Metacognitive | Guiding performance processes in a self-aware manner (internal focus) |
| General/Rule | Knowledge and experience of a content domain organised as rules |
| Subconscious | Vague awareness and intuitive insights derived implicitly from knowledge, experience and emotions |

Table 1: The four levels/modes of processing

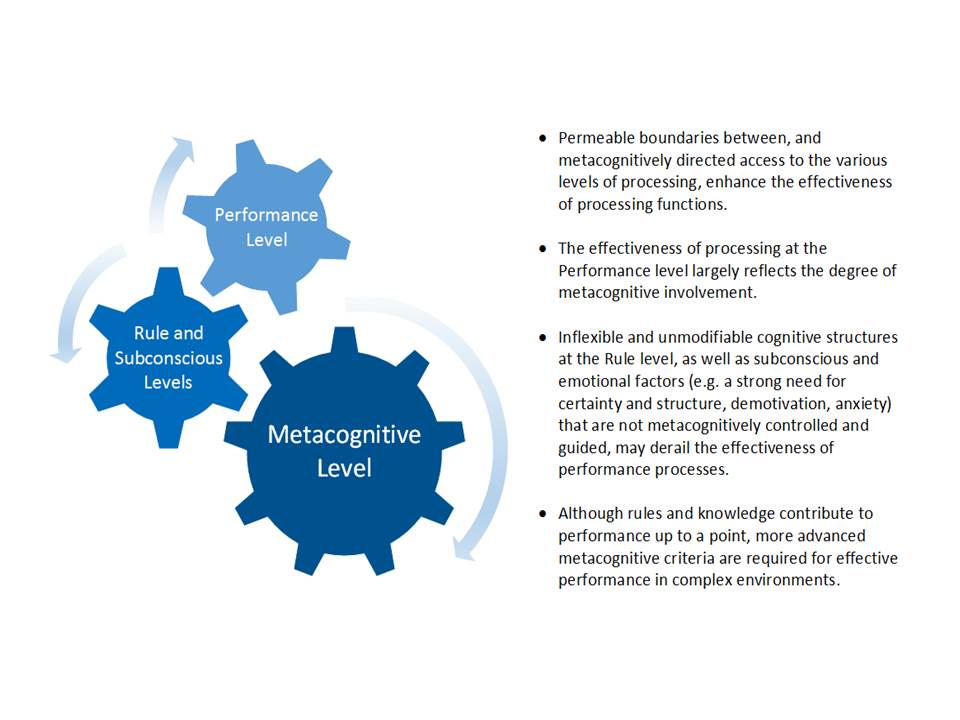

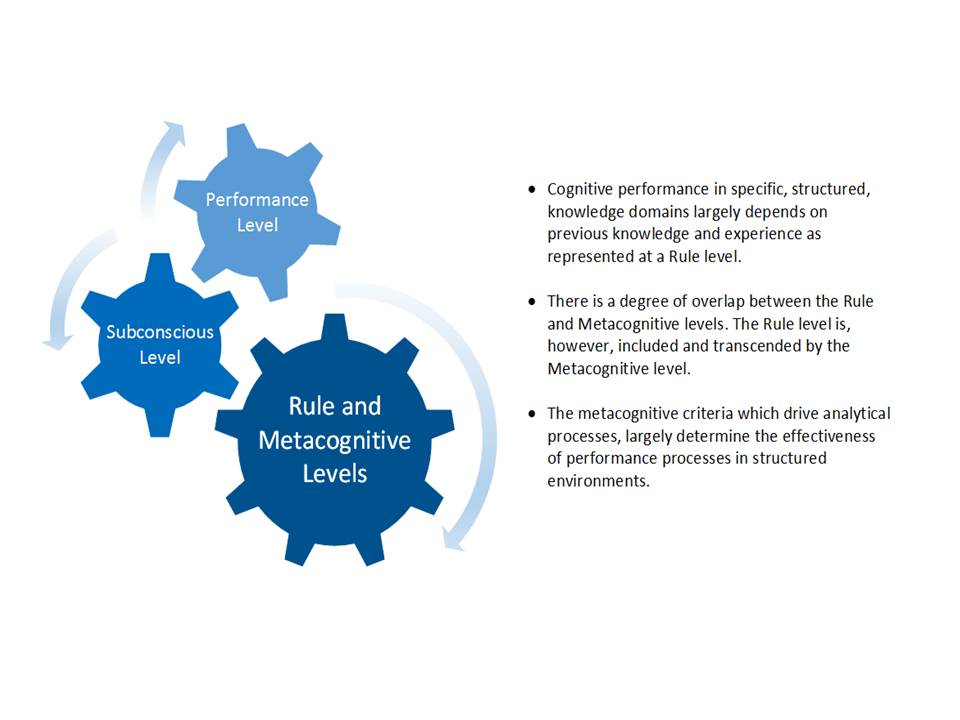

Figures 3, 4 and 5 show how, within the processing model, cognition depends on context.

The effectiveness of processing activities at each of these levels or modes depends on the cognitive requirements posed by the specific context. For purposes of illustration, three such environments and their processing requirements are represented graphically, namely:

- complex environments

- structured environments

- innovative environments.

Figure 3: Complex environments (unstructured, vague, ambiguous, unfamiliar environments, cognitively challenging problem solving and contextualisation).

Figure 4: Structured contexts (based on knowledge and experience).

Figure 5: Innovative contexts

The processes, as functional categories, and the notion of levels can be described as follows:

Focusing and selecting involves applying processing activities to explore complicated perceptual and/or conceptual configurations according to criteria of relevance, in order to select particular elements for further processing. It can be viewed as a rapid search through a mass of relevant and irrelevant data to select what is relevant. Focusing and selecting is mostly guided by the metacognitive criteria of relevance, familiarity, clarity, and the degree or depth of exploration.

Existing theoretical constructs of processing activities that can be related to the process of focusing and selecting are: encoding (Carroll, 1981; Sternberg, 1977a); selective encoding (Sternberg, 1986); problem space exploration (Feuerstein, Rand, Hoffman & Miller, 1980; Spearman, 1923; Sternberg, 1977c; 1979; 1983; 1986; Sternberg & Rifkin, 1979; Sternberg & Smith, 1986; Wagner & Sternberg, 1984; Whimbey & Lockhead, 1980; Whiteley & Barnes, 1979); attribute discovery (Sternberg, 1977c); problem detection and recognition (Baron, 1982); detection (Resnick & Glaser, 1976); feature scanning (Resnick & Glaser, 1976); and apprehension (Carroll, 1981; Spearman, 1923).

The processing construct of linking can be described as the application of processing activities to perceptual and conceptual configurations in order to identify the elements involved, the common properties and/or relationships between these elements through the comparison of their structural characteristics. The relating, or linking, of information structures often precedes, and hence forms a basis for, most other processes. Linking and other analytical processes are guided by metacognitive criteria such as: observation of rules, detail and precision, accuracy, being systematic, the most appropriate level of specificity or generality and interrelationship (e.g. similarities, differences, degree of matching).

Existing theoretical concepts that can be compared to that of linking are: inference (Spearman, 1923; Sternberg, 1977b; 1977c; 1983; 1986; Sternberg & Smith, 1986; Wagner & Sternberg, 1984); processes such as comparing, associating, combining, transferring and matching (Cronen & Mikevc, 1972; Sternberg, 1977c; 1979; 1983; 1985; Whiteley & Barnes, 1979); selective comparison and selective combination (Sternberg, 1983; 1985; 1986); enumeration of possibilities (Baron, 1982); production (Hirschman, 1981); education of relations (Spearman, 1923); Simon and Kotovsky’s (1963) three processes involved in the solution of series problems, namely the detection of relations, the discovery of periodicity, and the completion of pattern descriptions; solution through analogy (Schultz & Lockhead, 1988); Burt’s (1949) apprehension and combination of relationships; similarity judgements (Estes, 1986); and the understanding of relations (Spearman, 1923).

Inductive reasoning tests such as analogies, series completions and classifications, and deductive reasoning tests such as linear, categorical and conditional syllogisms, primarily require relational thinking. Thurstone (1938, in Colberg, Nester & Trattner, 1958) described induction as the finding of a rule or principle and suggested that it might be comparable to Spearman’s g, a concept closely associated with intelligence testing.
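Simon and Kotovsky’s (1963) three processes for series problems can be illustrated with a simplified letter-series solver. This is a hypothetical sketch, not a reconstruction of Simon and Kotovsky’s program. It assumes series of lowercase letters and treats a “relation” as a constant alphabetic step (wrapping from z to a). It detects such relations at each position, searches for the shortest period over which every position follows one (discovery of periodicity), and then extrapolates (completion of the pattern). The function names `find_period_and_steps` and `extend_series` and the `max_period` limit are illustrative assumptions.

```python
import string
from typing import List, Optional, Tuple

ALPHABET = string.ascii_lowercase  # assumption: series use lowercase letters only


def find_period_and_steps(series: str, max_period: int = 4) -> Optional[Tuple[int, List[int]]]:
    """Find the shortest period such that, at each position within the period,
    successive letters differ by a constant alphabetic step (detection of relations)."""
    idx = [ALPHABET.index(c) for c in series]
    for period in range(1, max_period + 1):  # try shortest periods first
        steps: List[int] = []
        ok = True
        for offset in range(period):
            sub = idx[offset::period]  # letters occupying this position in each cycle
            if len(sub) < 2:
                ok = False  # too little evidence to detect a relation
                break
            diffs = {(b - a) % 26 for a, b in zip(sub, sub[1:])}
            if len(diffs) != 1:
                ok = False  # no single constant step at this position
                break
            steps.append(diffs.pop())
        if ok:
            return period, steps
    return None


def extend_series(series: str, n: int = 1, max_period: int = 4) -> Optional[str]:
    """Complete the pattern by generating the next n letters, or None if no pattern is found."""
    found = find_period_and_steps(series, max_period)
    if found is None:
        return None
    period, steps = found
    letters = list(series)
    for _ in range(n):
        pos = len(letters) % period           # position of the new letter within the cycle
        prev = letters[len(letters) - period]  # letter at the same position one cycle earlier
        letters.append(ALPHABET[(ALPHABET.index(prev) + steps[pos]) % 26])
    return "".join(letters[len(series):])
```

For example, `extend_series("atbtctdt", 2)` finds a period of 2 (one position advancing by one letter, the other repeating "t") and returns `"et"`, while `extend_series("aaabbbccc", 3)` finds a period of 3 and returns `"ddd"`. Searching shorter periods first is a simplifying choice that favours the most parsimonious pattern description.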

The processing construct of structuring involves the application of processing activities to perceptual and/or conceptual configurations so as to order or group their elements to represent particular relationships and form coherent structures according to criteria of meaningfulness. Structuring activities are involved in problem representation, the subsequent use of such representations, the modification of inadequate representations and the integration of knowledge structures. Structuring may range from conceptualising simple models and ideas to constructing complicated, integrated networks, and may incorporate aspects of other processes. The relevant metacognitive criteria that guide structuring and integration processes include coherence, meaningfulness, abstraction, representation, pattern, core elements, inclusiveness and simplicity.

A variety of processing activities referred to in the literature can be associated with structuring. These include: representing a structure (Hirschman, 1981; Whimbey & Lockhead, 1980); co-representation formation (Carroll, 1981); defining a problem (Feuerstein et al., 1980; Resnick & Glaser, 1976); mapping (Sternberg, 1988; Sternberg & Smith, 1986); Baron’s (1982) stages of the enumeration of possibilities and reasoning; understanding (Greeno, 1978; Larkin, 1985); as well as processing activities that are often mentioned in cognitive psychology, such as categorising, grouping, ordering, chunking, organising, conceptualising and formulating.

The concept of “structuring” is also central to the schema theories that have contributed greatly to the development of Artificial Intelligence and simulation or production programs. It is also implied by most of the cognitive style theories formulated in terms of concepts such as cognitive complexity, conceptual differentiation, category width and equivalence range, conceptual integration, analytic versus relational categorisation, compartmentalisation, preferred level of abstraction and levelling versus sharpening, as was mentioned by Wardell and Royce (1978). Expert-novice studies, and in particular those in the semantically rich domains of Physics and Radiology, have indicated that expertise is primarily characterised by superior problem representation, which can be seen as a structuring activity.

The processing construct of transformation involves processing activities applied to mental representations for the purpose of changing structural elements to establish and/or change inter-structural links via logical and lateral reasoning processes. Quantitative and qualitative transformations can be identified. Quantitative transformations involve a metacognitively directed series of linking steps applied for transformational purposes, mostly of a rigorous logical or convergent nature. In a qualitative transformation, the newly created structure cannot be linked sequentially to the previous one. Qualitative transformations often involve intuitive or abstract conceptual processing, in which both metacognitive awareness and the subconscious may play a role. The particle and wave theories of light in Physics are an example. The transformation of elements or relationships in problem solving often requires divergent thinking. Transformation usually involves complex processing and is therefore more integrated in terms of the variety of cognitive processes and levels involved. It capitalises strongly on metacognitive guidance of the thinking process via the criteria of purposefulness, difference/novelty/creativity and applicability.

The process of transformation can be related to the following concepts found in the literature: Carroll (1981) referred to the transformation of a mental representation; Baron’s (1982) stage of the enumeration of possibilities includes the transformation of structures; Guilford (1967) mentioned the product of transformation; and Greeno (1978) described transformation as the construction or generation of new relationships and components.

The processing construct of retention and recall involves the application of processing activities to perceptual and/or conceptual configurations for the purposes of both storing and retrieving information. Storing and retrieving, the two memory processes involved, may, however, rely on different mental mechanisms. Retention and recall are guided by the metacognitive criteria of relevance, significance, importance, familiarity and relationship.

Numerous research findings point to the centrality of memory functions in cognitive competence (Burt, 1949; Carroll & Maxwell, 1979; Estes, 1986; Eysenck, 1986; Larson & Saccuzzo, 1989).

According to Snow (1979), no other area in cognitive psychology has received as much research attention as memory functions. This may be because working memory plays such a central role in thinking: all other processing activities probably involve memory functions to some extent (Carroll & Maxwell, 1979). Although the processes of retention and recall are discussed jointly, they are, in all probability, fundamentally separate processes. Findings indicating that recall is a reconstructive process support this view. Horn (1986) also observed that the retrieval of information seems to be independent of its storage, and stated that individual differences on one of these functions are not fully predictive of differences on the other. Retention and recall are nevertheless postulated as a single processing construct on the basis of their functional nature and the fact that retention is a precondition for recall.

A review of the memory research literature reveals certain trends. Early research focused on quantitative phenomena in memory performance; typical examples are the learning and forgetting curves studied during the 1950s and 1960s. Contemporary memory research emphasises the mechanisms involved in memory performance. A number of theorists regard memory as an associative process (Anderson, 1975), and certain production systems treat productions as equivalent to associations. In addition to association based on content, covariation is also regarded as a basic relational mechanism.

Theorising on memory focuses predominantly on the construction of memory models, which are dominated by the concept of stores and the transfer of information between them. Stage, level and trace theories have also been developed. It is widely accepted that memory functions can be described in terms of three levels of storage, namely sensory stores, short-term memory and long-term memory (Craik & Lockhart, 1972). This notion of stores is incorporated in the multi-store models. Various store models reflect the idea of spreading activation (Anderson, 1985; Gitomer & Pellegrino, 1985), in which activation travels along a network of paths where concepts are represented as nodes and the links between them represent particular types of relationship.
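Spreading activation can be sketched as repeated propagation over a weighted network of concept nodes. The following is a generic, hypothetical illustration, not Anderson’s (1985) or Gitomer and Pellegrino’s (1985) formulation. The example network, the link strengths, the `decay` factor, the number of `steps` and the pruning `threshold` are all assumptions chosen for clarity.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Assumption: a semantic network maps each concept to (linked concept, link strength) pairs.
Network = Dict[str, List[Tuple[str, float]]]


def spread_activation(network: Network, sources: Dict[str, float],
                      decay: float = 0.5, steps: int = 2,
                      threshold: float = 0.01) -> Dict[str, float]:
    """Propagate activation outward from source concepts.

    On each step, every node activated in the previous step passes
    activation * link strength * decay to each neighbour. Activation
    accumulates at each node; contributions below `threshold` are dropped.
    """
    total: Dict[str, float] = defaultdict(float)
    for node, a in sources.items():
        total[node] += a
    frontier = dict(sources)
    for _ in range(steps):
        nxt: Dict[str, float] = defaultdict(float)
        for node, a in frontier.items():
            for neighbour, strength in network.get(node, []):
                passed = a * strength * decay
                if passed >= threshold:
                    nxt[neighbour] += passed
        for node, a in nxt.items():
            total[node] += a
        frontier = nxt  # only newly activated nodes spread on the next step
    return dict(total)


# Hypothetical example network.
EXAMPLE_NETWORK: Network = {
    "bird": [("canary", 0.9), ("wings", 0.8)],
    "canary": [("yellow", 0.7), ("bird", 0.9)],
    "wings": [("fly", 0.9)],
}

activation = spread_activation(EXAMPLE_NETWORK, {"bird": 1.0})
```

With these assumed values, activating "bird" gives "canary" 0.45 and "wings" 0.4 after one step, while the more distant concepts "yellow" and "fly" receive weaker activation (about 0.16 and 0.18) after two, mirroring the idea that activation weakens as it travels along longer paths.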